3.5 Data and Algorithm Ethics

Basic Terms of Data Ethics

Test your prior knowledge of basic terms about data ethics here.

Ethics Quiz: Who should decide?

Terminology: Data and Algorithm Ethics

Watch the following video as an introduction to data ethics.

Multiple Choice

Data Problems

How bad data keeps us from good AI

Watch the following video from minute 5:38 onward.

How I'm fighting bias in algorithms

Watch the following video on how to fight bias in algorithms.

Facebook Algorithm: https://algorithmwatch.org/en/automated-discrimination-facebook-google/

Application Task

Morality and Decisions

Interview with Prof. Dr. Joanna Bryson about Morality and Algorithms

Watch the following interview and do the comprehension task.

(Course: Daten- und Algorithmenethik [Data and Algorithm Ethics], Episode 3)

Comprehension task: How does the video define and problematize the terms “decision” and “moral agency”?

Sociotechnical Systems

Reflection on the entry exercise:

Have you changed your mind? Who should make the decisions? Man or machine?

Case Study: Automated Applicant Management

Basis for synchronous lesson

The use of artificial intelligence in the hiring process has increased in recent years with companies turning to automated assessments, digital interviews, and data analytics to parse through resumes and screen candidates. But as IT strives for better diversity, equity, and inclusion (DEI), it turns out AI can do more harm than good if companies aren’t strategic and thoughtful about how they implement the technology.

Wendy Rentschler, head of corporate social responsibility, diversity, equity, and inclusion at BMC Software, is keenly aware of the potential negatives that AI can bring to the hiring process. She points to an infamous case of Amazon’s attempt at developing an AI recruiting tool as a prime example: The company had to shut the project down because the algorithm discriminated against women. “If the largest and greatest software company can’t do it, I give great pause to all the HR tech and their claims of being able to do it,” says Rentschler.

Some AI hiring software companies make big claims, but whether their software can help determine the right candidate remains to be seen. The technology can help companies streamline the hiring process and find new ways of identifying qualified candidates using AI, but it’s important not to let lofty claims cloud judgment.

Source:

https://www.cio.com/article/189212/ai-in-hiring-might-do-more-harm-than-good.html

Task:

Take on the perspective of each role in turn: the company, the programmer and the user.

- For each role, write down two arguments for and two arguments against automated applicant management!

- What motives might lead each of these parties to delegate decisions like these to algorithms? Come up with two motives for each role!

- What positive and negative consequences can result, and who bears responsibility for them?

Case Study: Legal System

Read the excerpt from the book “Ein Algorithmus hat kein Taktgefühl” (English: “An algorithm has no sense of tact”) by Katharina Zweig as a basis for the synchronous lecture, and form your own opinion.

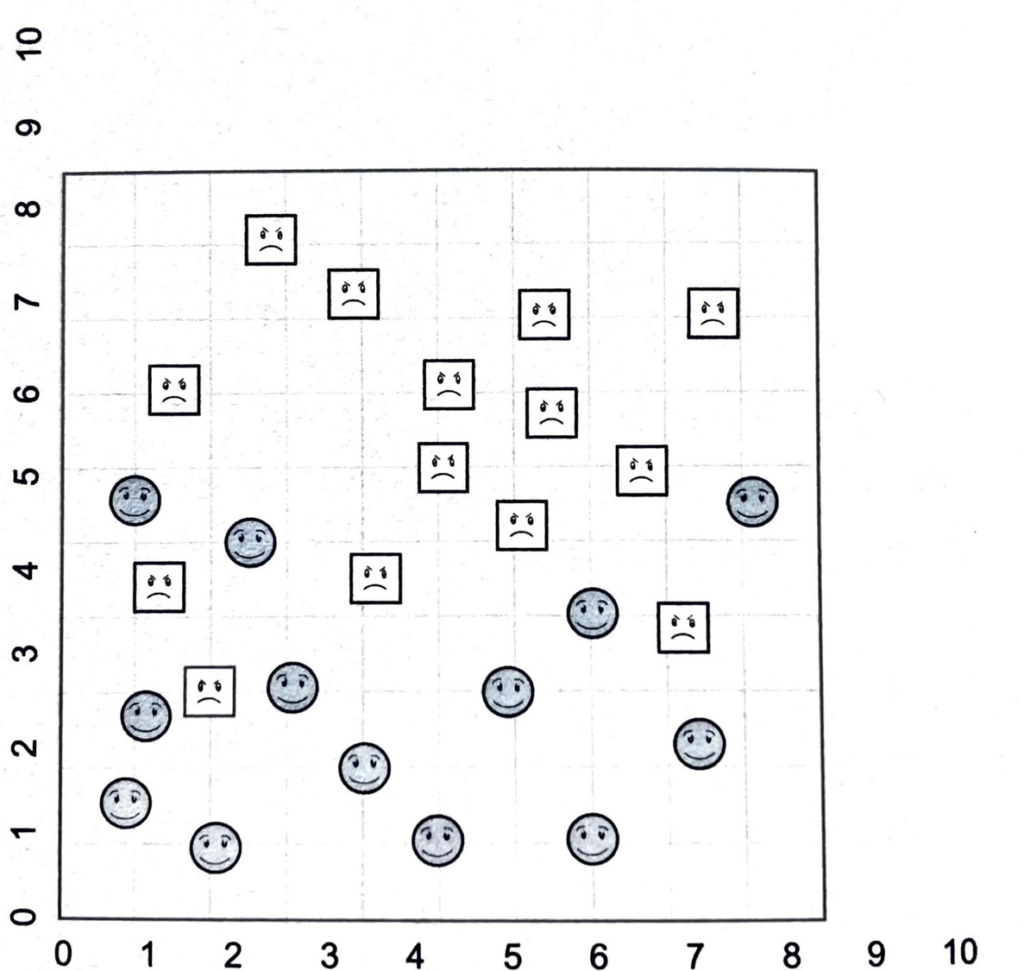

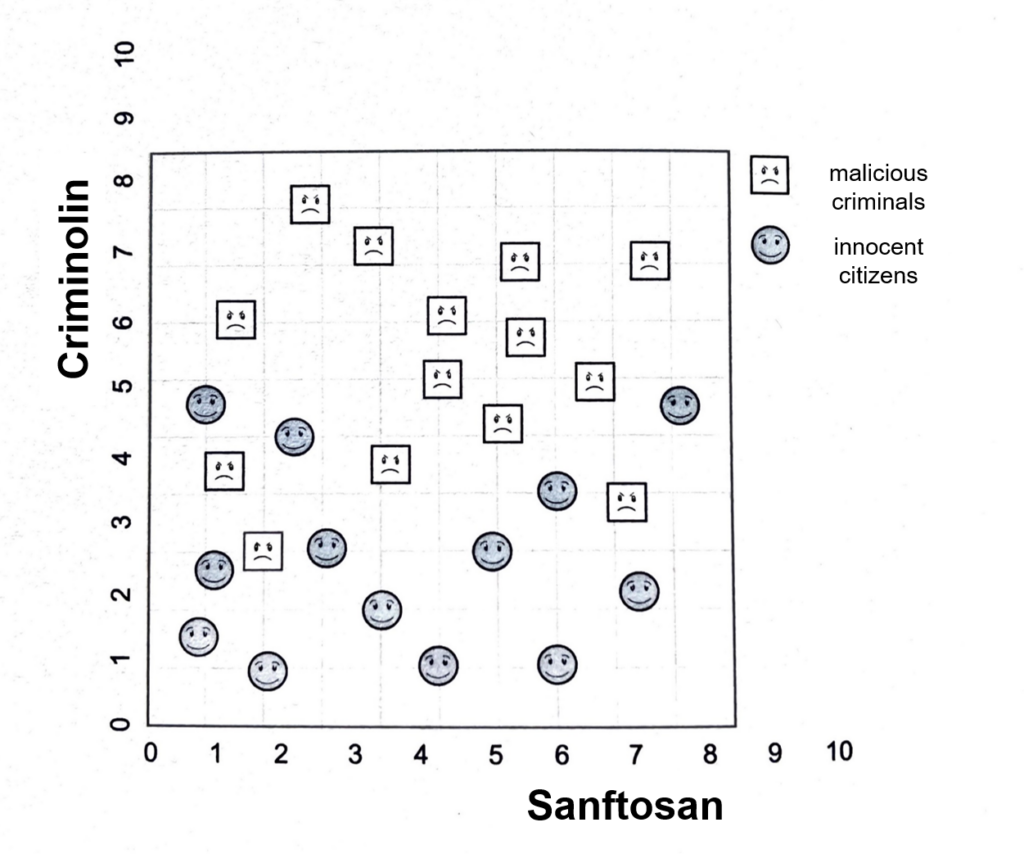

As background, it is important to understand the basic idea of the so-called support vector machine (SVM). When this machine is trained, it must be decided how the data are assigned to two different groups. In the illustration, this means deciding where to draw a straight line that separates the square smileys from the circular ones as well as possible. The number of errors should be as small as possible.
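The idea of “drawing a straight line with as few errors as possible” can be made concrete in a few lines of code. The sketch below is a hedged illustration, not how real SVM software works: it runs a brute-force grid search over straight lines w · x + b = 0, keeps the lines with the fewest misclassified points and, among those, prefers the one with the widest margin (the distance to the nearest point), which is the core idea behind a hard-margin SVM. The toy data, the helper names (`classify`, `count_errors`, `min_margin`, `best_line`) and the grid resolution are assumptions made for this example. Actual SVM solvers optimize a margin-based objective directly and, when the groups overlap, trade errors against margin instead of minimizing the error count first.

```python
import math

# Hypothetical toy data, invented for illustration (not the values in Zweig's figure).
# Each point is (criminolin, sanftosan); +1 = "square" (criminal), -1 = "circle" (innocent).
CRIMINALS = [(4.0, 1.0), (5.0, 2.0), (4.0, 3.0), (6.0, 1.0)]
INNOCENTS = [(1.0, 4.0), (2.0, 5.0), (1.0, 2.0), (2.0, 3.0)]


def classify(w, b, point):
    """Return +1 if the point lies on the positive side of the line w . x + b = 0, else -1."""
    return 1 if w[0] * point[0] + w[1] * point[1] + b > 0 else -1


def count_errors(w, b, data):
    """Number of labeled points that the line puts on the wrong side."""
    return sum(1 for point, label in data if classify(w, b, point) != label)


def min_margin(w, b, data):
    """Smallest signed distance of any point to the line (negative if misclassified)."""
    norm = math.hypot(w[0], w[1])
    return min(label * (w[0] * p[0] + w[1] * p[1] + b) / norm for p, label in data)


def best_line(data, angle_steps=360, offsets=None):
    """Hypothetical brute-force helper: fewest errors first, then the widest margin."""
    if offsets is None:
        offsets = [k / 4 for k in range(-40, 41)]  # candidate b values from -10 to 10
    best = None
    for step in range(angle_steps):
        theta = 2 * math.pi * step / angle_steps
        w = (math.cos(theta), math.sin(theta))  # unit normal vector of the line
        for b in offsets:
            # Tuples compare element by element: error count first, then negated margin
            key = (count_errors(w, b, data), -min_margin(w, b, data))
            if best is None or key < best[0]:
                best = (key, w, b)
    return best[1], best[2]


data = [(p, 1) for p in CRIMINALS] + [(p, -1) for p in INNOCENTS]
w, b = best_line(data)
print("errors:", count_errors(w, b, data))
print("margin:", round(min_margin(w, b, data), 3))
```

With these made-up points a separating line exists, so the search reports zero errors. On overlapping data, such as the hormone example in the excerpt, zero errors is usually impossible, and choosing the line then requires deciding which errors to accept.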

Imagine that we could supposedly predict, to some extent, whether or not someone will become a criminal on the basis of two hormones found in the blood. One hormone is called “criminolin”, the other “sanftosan”. The bright square smileys represent criminals (male and female), and the gray circular smileys represent innocent citizens. The data look like this: Again, draw your dividing line through the data points; it will then be used to classify further people in the next step […].

In fact, this is a difficult balancing act: On the one hand, society has a legitimate interest in identifying as many criminals as possible. On the other hand, innocent people must of course be protected. As early as the 1760s, the legal philosopher William Blackstone therefore coined the following maxim: “It is better that ten guilty persons escape, than that one innocent suffer.”[92] Dick Cheney, former Vice President of the United States, expressed the opposite view in a 2014 interview about the report on the CIA’s use of torture at Guantanamo Bay and other locations: “I’m more concerned with the bad guys who got out and released than I am with a few that, in fact, were innocent.” When reporter Chuck Todd asked whether that still held given that an estimated 25 percent of the detainees had been innocent and illegally detained, Cheney responded: “I have no problem as long as we achieve our objective.”[93] The two would therefore build very different moral principles into the optimization function and make very different choices: Blackstone would draw the line so that no innocent person ends up on the wrong side, and Cheney so that every criminal is actually caught.[94]
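Blackstone’s and Cheney’s rules can be read as two different ways of placing the dividing line. To keep the sketch short, it assumes that each person has already been reduced to a single hypothetical risk score (for example criminolin minus sanftosan), so that drawing the line becomes choosing a threshold. This one-dimensional simplification, the scores and the names `confusion`, `blackstone` and `cheney` are all invented for illustration and do not come from Zweig’s book.

```python
# Hypothetical one-dimensional risk scores (for example criminolin minus sanftosan).
# The numbers are invented for illustration and do not come from Zweig's book.
criminal_scores = [0.5, 2.5, 3.0, 4.5, 5.0]
innocent_scores = [-3.0, -1.0, 1.0, 2.0, 3.5]


def confusion(threshold):
    """Flag everyone with score >= threshold; return (caught, missed, wrongly_flagged)."""
    caught = sum(s >= threshold for s in criminal_scores)
    missed = len(criminal_scores) - caught                          # false negatives
    wrongly_flagged = sum(s >= threshold for s in innocent_scores)  # false positives
    return caught, missed, wrongly_flagged


# Blackstone: no innocent person may be flagged, so the line must lie above every
# innocent score (here: at the lowest criminal score above all innocents).
blackstone = min(s for s in criminal_scores if s > max(innocent_scores))
# Cheney: no criminal may escape, so the line goes at the lowest criminal score.
cheney = min(criminal_scores)

for name, t in [("Blackstone", blackstone), ("Cheney", cheney)]:
    caught, missed, wrongly = confusion(t)
    print(f"{name}: threshold={t}, caught={caught}, missed={missed}, innocents flagged={wrongly}")
```

With these invented numbers, the Blackstone threshold (4.5) flags no innocent person but lets three of the five criminals go, while the Cheney threshold (0.5) catches all five criminals and flags three innocent people.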

This example shows that the choice of a quality measure always involves a moral tradeoff, namely deciding which kind of wrong decision is more serious. It is impossible to avoid making this decision. For example, accuracy, the share of correct classifications, implicitly assumes that both kinds of mistakes are equally bad: What counts is the number of correct decisions, and it makes no difference whether a person was correctly labeled a criminal or correctly labeled an innocent citizen. Both correct decisions are worth the same. As the examples of Blackstone and Cheney show, you can change this by introducing weights. But who exactly is going to determine them, and how? So here we have another cog, a new scale that is always ethical in nature and never “neutral” or “objective.” The only question is: Do you weight more like Blackstone? Or the way Cheney does? The choice is yours!
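The weights mentioned in the text can be written as a cost function: a threshold is chosen to minimize weight_fp · FP + weight_fn · FN, where FP counts innocent people labeled criminal and FN counts criminals labeled innocent. With equal weights this is the same as maximizing accuracy. The sketch below is a hedged illustration under stated assumptions: each person is represented by a single hypothetical risk score (a one-dimensional simplification of the two-hormone picture), the scores are invented, and the 10:1 weights are illustrative choices (the Blackstone-like ratio is loosely inspired by his maxim, the Cheney-like one is simply its mirror image), not values given by Zweig.

```python
import math

# Hypothetical risk scores, invented for illustration only.
criminal_scores = [0.5, 2.5, 3.0, 4.5, 5.0]
innocent_scores = [-3.0, -1.0, 1.0, 2.0, 3.5]


def errors(threshold):
    """Return (false_positives, false_negatives) when flagging scores >= threshold."""
    fp = sum(s >= threshold for s in innocent_scores)  # innocent but flagged
    fn = sum(s < threshold for s in criminal_scores)   # criminal but not flagged
    return fp, fn


def weighted_cost(threshold, weight_fp, weight_fn):
    """Weighted error: equal weights give the same ranking as accuracy."""
    fp, fn = errors(threshold)
    return weight_fp * fp + weight_fn * fn


def best_threshold(weight_fp, weight_fn):
    """Pick the candidate threshold with the lowest weighted cost."""
    # Every observed score is a candidate; math.inf means "flag nobody".
    candidates = sorted(criminal_scores + innocent_scores) + [math.inf]
    return min(candidates, key=lambda t: weighted_cost(t, weight_fp, weight_fn))


print("equal weights   :", best_threshold(1, 1))   # same as maximizing accuracy
print("Blackstone-like :", best_threshold(10, 1))  # assumption: 1 wrongly flagged innocent costs 10
print("Cheney-like     :", best_threshold(1, 10))  # assumption: 1 escaped criminal costs 10
```

With these made-up scores, equal weights choose 2.5, the Blackstone-like weights choose 4.5 and the Cheney-like weights choose 0.5: the same data yield three different dividing lines depending only on how the two kinds of error are weighted.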

Source: Zweig, Katharina (2019): Ein Algorithmus hat kein Taktgefühl. Wo künstliche Intelligenz sich irrt, warum uns das betrifft und was wir dagegen tun können, 5th edition, Wilhelm Heyne Verlag, München, pp. 164 f.

92 William Blackstone: Commentaries on the Laws of England, Vol. IV, 1765, 21st edition, Sweet, Maxwell, Stevens & Norton, London (1844), p. 358

93 Transcript of the December 14, 2014 broadcast of “Meet the Press” (NBC), including Chuck Todd’s interview with Dick Cheney and other politicians, available at: https://www.nbcnews.com/meet-the-press/meet-press-transcript-december-14-2014-n268181

94 The idea of contrasting these two quotes comes from a video, well worth watching, by Cris Moore on the limited utility of computers in science and society, available at: https://youtube.com/watch?v=Sg2jtEY6qms

Summary: 6 Types of Ethical Concerns

Mittelstadt et al. (2016) propose a helpful way of systematizing and structuring ethical problems. The authors identify and categorize six types of ethical concerns raised by algorithms, which are summarized here very briefly.

Source:

Mittelstadt, B. D., Allo, P., Taddeo, M., Wachter, S., & Floridi, L. (2016). The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), 2053951716679679.